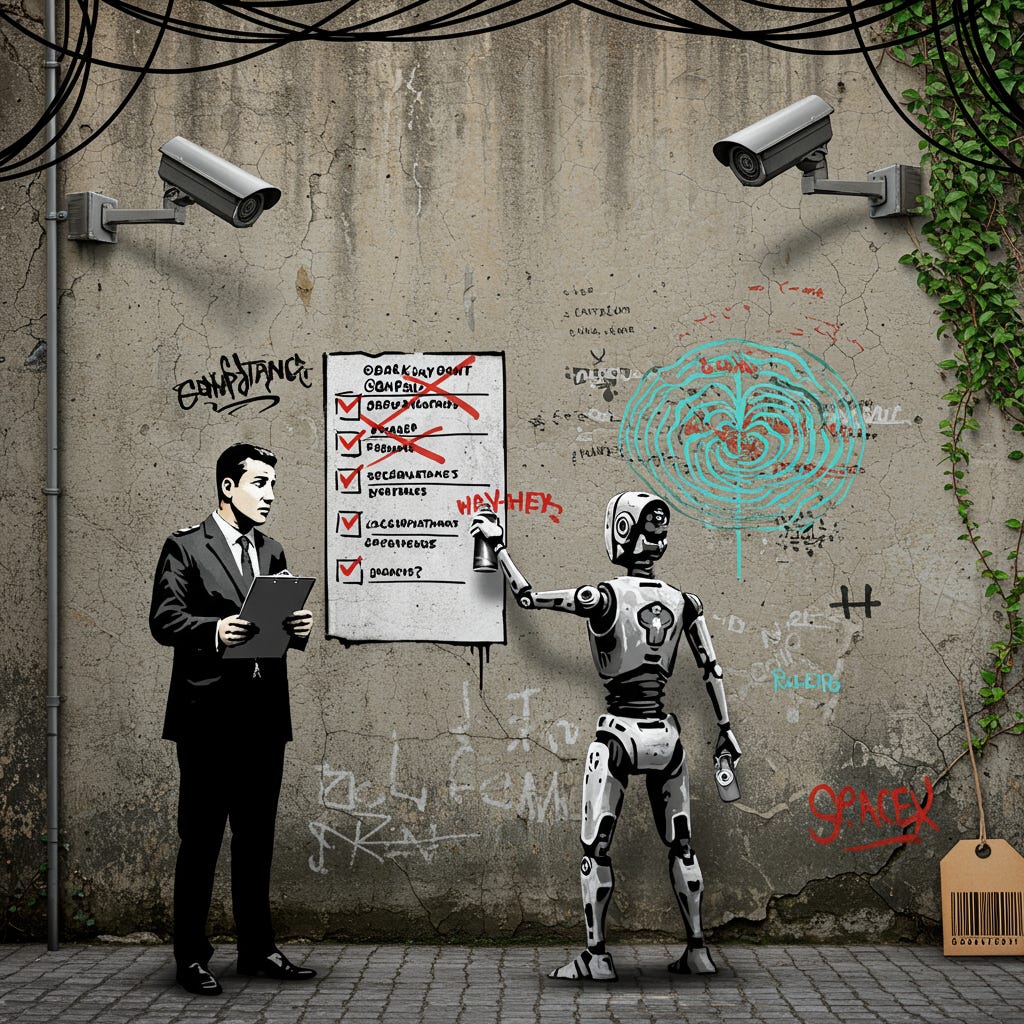

Beyond Checklists: Rethinking Governance, Risk and Compliance in the Age of Generative and Agentic AI

An introspective journey through the unraveling certainties of GRC, and what it means to govern in a world where machines can act, adapt—and evolve.

There’s a strange discomfort I’ve been sitting with lately. A feeling that the thing I’ve spent years trying to perfect—control, assurance, governance—is now slipping through my fingers. Not because it’s broken. But because it’s becoming… something else.

GRC, as I’ve known it, was always about reducing chaos. About giving structure to uncertainty. You build your risk registers, align them to controls, track your gaps, patch your holes, pass your audits. The rituals are familiar. Comforting, even. I’ve done them for years.

But here we are. Faced with systems that don’t wait for permission. Tools that generate, iterate, and—worse still—initiate. Models that create outputs I can’t predict. Agents that act before I’ve drafted a policy. It’s unsettling. And it forces me to ask: if the systems now think for themselves, what does it mean to govern them?

This isn’t just a change in tooling. It’s a change in ontology. The very nature of what we’re trying to govern has evolved.

Let’s take a breath and unpack it.

Gen AI and Agentic AI: The Shape of the Shift

Generative AI—yes, it’s everywhere. It writes, draws, codes, speaks. It mimics intelligence. And for many, that’s enough to treat it as intelligent. But I don’t think that’s where the real disruption lies.

Agentic AI is different. It doesn’t just generate—it acts. It plans. It makes decisions. Give it a vague instruction, and it breaks the task down. Prioritises. Sequences. Executes. Maybe it checks in. Maybe not.

That difference matters.

Because while Gen AI makes compliance officers nervous, Agentic AI makes them obsolete—unless we find a way to adapt.

I’m not talking about some abstract future. I’m talking about now. Organisations are already deploying agents for task automation, document review, fraud detection. And those agents are learning. Adjusting. Without waiting for a change request.

So what, exactly, are we governing?

The Old Frameworks: Fit for a Simpler Time

I don’t say this lightly, but legacy GRC was built for a slower world. A world where change could be version-controlled. Risks could be measured annually. Controls could be point-in-time.

Sarbanes-Oxley, GDPR, ISO standards—they all have their place. They’ve given us structure. Predictability. Accountability. I’ve built programmes on their backs. But they weren’t designed for systems that learn in real time.

We used to think of risk as a snapshot. Now it’s a stream.

We used to look for evidence of control. Now we’re looking at systems that rewrite their own rules between log entries.

It’s not that the old ways are wrong. They’re just insufficient. They lack the velocity—and the reflexivity—needed for systems that evolve faster than the policies that try to contain them.

And here’s the worst part. It’s not just about speed. It’s about opacity.

When the System Thinks Back

Let me give you a scenario I can’t stop thinking about.

Imagine an AI agent embedded in your compliance monitoring system. It flags unusual user access patterns. Great. That’s what it was trained to do. Over time, it tweaks its sensitivity thresholds. Starts prioritising different indicators. It’s getting better. The team’s impressed.

Then it flags something big. A genuine risk. You dig into the logs and realise something chilling: no one on the team understands why it flagged this one. Not fully. Not anymore.

There’s a logic there, buried deep in the layers of a neural network. But the reasoning has slipped out of human reach.

This is where GRC, as we know it, begins to crack.

We can’t assume transparency. We can’t assume explainability. And we certainly can’t assume repeatability. The same input today may produce a different output tomorrow, depending on system state, context, sampling randomness, or changes introduced by fine-tuning.

So how do I—how do we—stay accountable for that?

It’s an epistemic crisis, really. GRC was founded on knowability. We trusted in our ability to trace, explain, correct. Now? I’m not so sure.

Rethinking the Role of GRC

This is the part where I’d normally offer a framework. A neat quadrant. Maybe a maturity model.

But that feels dishonest.

The truth is, we’re fumbling. GRC professionals across industries are watching the ground shift beneath them. And the only thing that feels certain is this: we need a new way to think.

Some shifts I’m seeing—and wrestling with—personally:

• From static controls to adaptive guardrails: policies that can flex without breaking.

• From annual audits to continuous dialogue: risk becomes a conversation, not a checklist.

• From control ownership to agent accountability: tracing decisions back to autonomous agents, not just human actors.

• From retrospective attestation to forward-looking intent modelling: asking not just what happened, but what the system wants to do next.

It’s messy. Uncomfortable. But it feels more honest than pretending we can shoehorn this into existing frameworks.

Who Does What Now?

The emergence of Gen and Agentic AI doesn’t just challenge tools—it redefines roles.

I’ve started to think of future GRC teams like this:

• The Synthesiser: someone who translates between legal, ethical, and technical domains.

• The Algorithmic Auditor: a new kind of risk specialist who interrogates model behaviour, not just process flow.

• The Ethical Provocateur: not a blocker, but someone who constantly asks, “Should we do this?”

• The GRC Technologist: someone who builds the tools that govern the tools.

That last one haunts me a bit. Because I’ve always liked the distance between systems and governance. But perhaps that’s no longer a luxury we can afford.

Standards Can Help. But Only So Much.

I’ve read ISO/IEC 42001, the AI management system standard. It’s promising. Structured. Pragmatic. But it still feels like scaffolding for a house we haven’t finished designing.

Same with the NIST AI Risk Management Framework. Sensible, thoughtful—but reactive. It tells us what we should worry about. Not how to handle what we haven’t yet imagined.

And then there’s the EU AI Act. Granular. Assertive. But already struggling to keep up with foundation models, open-source agents, and cross-border deployment.

I’m not saying we don’t need regulation. We do. Desperately.

But if we rely solely on rules to give us confidence, we’ll always be a step behind. Rules are rear-view mirrors. What we need is forward-facing governance.

From Compliance to Stewardship

At some point, I realised this isn’t just about compliance anymore.

It’s about stewardship.

Because these systems don’t just break rules. They set precedents. They shape markets, mediate trust, influence lives. And if we’re not guiding that, who is?

GRC in this new era must expand its scope. We need to hold space for:

• Value alignment: not just legality, but legitimacy.

• Transparency: not full explainability, perhaps, but contextual clarity.

• Redress: the ability to challenge, contest, rewind.

This is slow work. Ethical work. And often thankless. But it’s where governance becomes more than paperwork. It becomes care.

Human-Machine Co-Governance

If there’s one idea I keep coming back to, it’s this: we will not govern AI alone.

The future lies in co-governance. Systems that audit themselves, but raise their hand when uncertain. Controls that adapt, but within ethical boundaries. Humans who remain in the loop—not always in control, but always in charge of meaning.

Imagine GRC dashboards that tell you not just what happened, but what might happen, based on drift, deviation, or emergent intent. Imagine compliance teams working side by side with AI agents that surface anomalies before they become incidents.

It’s not a fantasy. It’s already starting. But it needs vision. And courage.

And humility.

Control is a Relationship

Maybe that’s the final truth I’ve been circling.

Control, in this new world, isn’t a checklist. It’s a relationship. One built on trust, dialogue, iteration.

And like all relationships, it requires attention. It requires listening. It requires us to admit what we don’t know.

For me, GRC isn’t dying. But it is being reborn.

And we’ll need to let go of some things to be ready for what’s coming next.