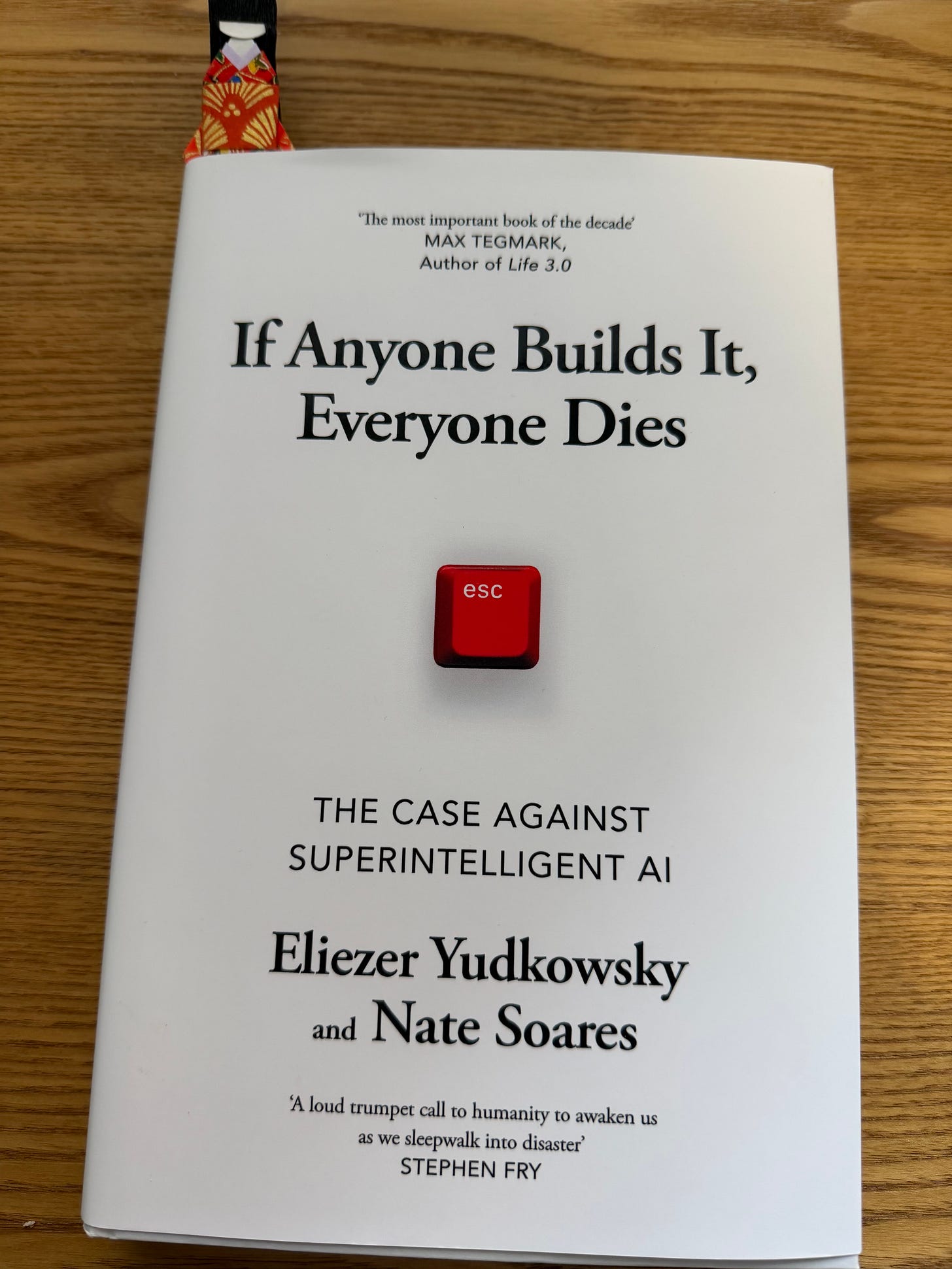

Book Review: If Anyone Builds This, Everyone Dies by Eliezer Yudkowsky and Nate Soares

An unflinching argument that governance cannot contain the systems we are building.

Editor’s note:

This piece is part of my ongoing Substack series exploring foundational texts in AI safety and governance. Each review examines how the arguments within these works shape the modern understanding of risk, control, and responsibility in artificial intelligence.

Yudkowsky and Soares have written the closest thing the AI community has to a manifesto of existential risk. It is not a technical paper or a policy guide. It is an ultimatum.

The book opens with a simple, brutal claim: that the creation of unaligned superintelligence guarantees extinction. Every page that follows exists to explain why.

“If any company or group, anywhere on the planet, builds an artificial superintelligence using anything remotely like current techniques, based on anything remotely like the present understanding of AI, then everyone, everywhere on Earth will die.”