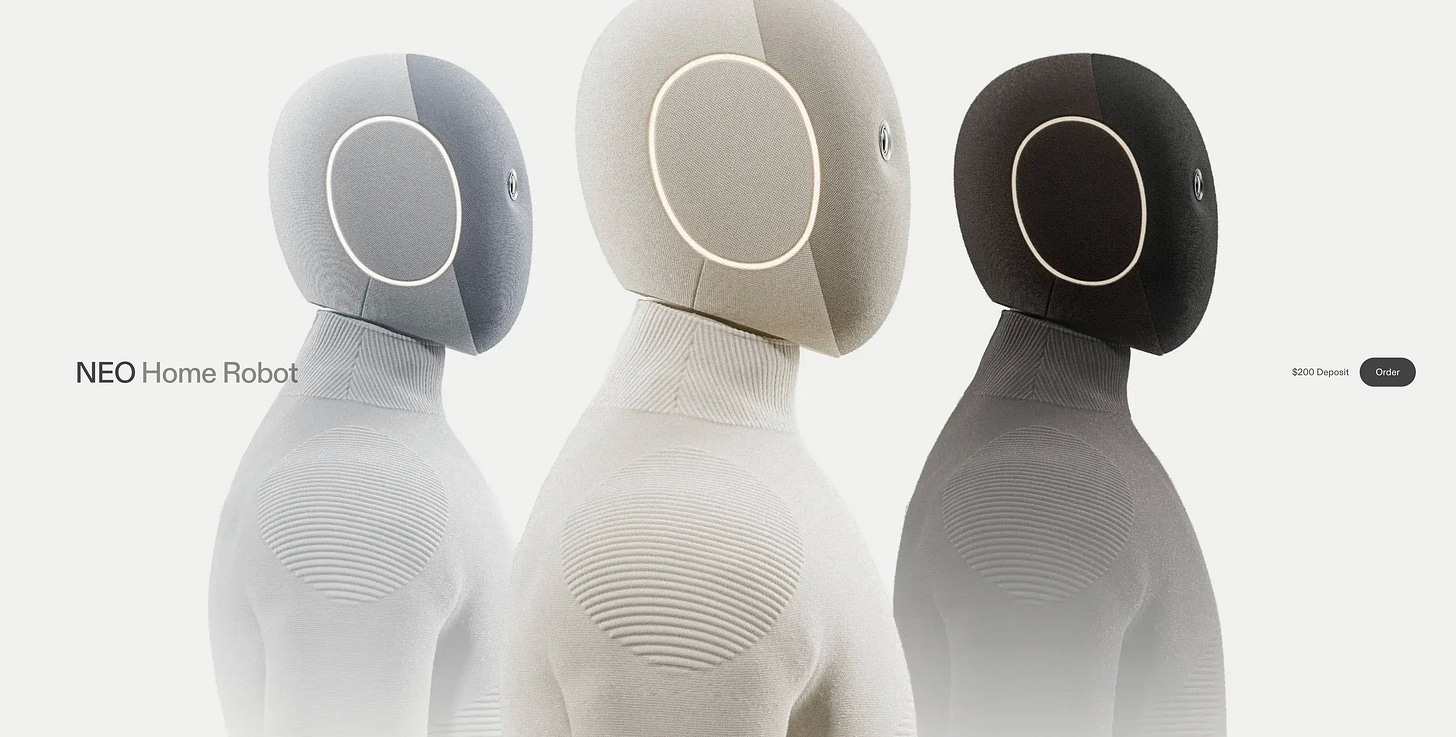

The $20,000 Humanoid That Wants to Live in Your Home

1X Technologies’ NEO robot promises to fold your laundry and chat like ChatGPT — but behind the marketing shine lies a human-in-the-loop system, data privacy questions, and an untested vision of domes


Reserving one requires a $200 deposit. I certainly won't be paying it.

Here’s a detailed breakdown of what I found on the NEO Home Robot: what it claims, what it delivers (so far), and where the caveats lie. I’m looking at this through the lens of AI ethics, robotics governance, and regulatory risk.

✅ What the company claims

Here are the headline specs and promises from 1X and the tech media:

Marketed as a consumer-ready humanoid robot for home use.

Pre-orders are live, with first US deliveries expected in 2026.

Two pricing models: a one-time payment of US$20,000 (“Early Access”) or a subscription model at US$499/month.

Claimed features include:

Basic household tasks: folding laundry, organising shelves, fetching items, turning lights off, opening doors.

An onboard large language model (LLM) for conversation, memory and personalisation.

Vision + audio intelligence to recognise context and respond to visual or spoken input.

Hardware specs: 5ft 6in tall, 66 lb weight, lift capacity around 150 lb, soft polymer body, low noise (22 dB).

Safety design: tendon-drive mechanisms and a “machine washable” body suit.

What to be cautious about, or what feels like marketing fluff

From an ethics and risk standpoint, a few issues stand out:

“Consumer-ready” is aspirational, not proven.

Shipping isn’t until 2026, and most capabilities have not been independently verified. The Wall Street Journal’s early test found that NEO “is still part human,” meaning a remote operator is often guiding the robot.

Data, privacy and remote-operation risk.

Reports suggest “expert mode” will allow remote human operators to take over when NEO gets stuck. That means live video from inside homes, possible data retention, and consent complexities. The company’s FAQ hints at data sharing but leaves governance details vague.

The gap between hype and capability.

Media demos showed it struggling with routine tasks, such as folding a shirt or closing a dishwasher, often with human assistance. It’s an impressive prototype, but far from a general-purpose home robot.

Regulatory, safety and liability gaps.

A humanoid robot moving and manipulating objects in private homes introduces risks: collisions, harm to pets or children, data collection, and even emotional attachment. Safety design is claimed, but it is unclear who provides oversight and which regulations or standards would apply.

Why this matters

This is a turning point: a move from industrial and research robotics into the domestic sphere. The ethical stakes shift with it.

The subscription and remote-operator model means NEO is as much a service as a device. That raises questions around who controls updates, how learning data is used, and where liability sits if things go wrong.

The “human-in-the-loop” approach is interesting: it provides a safety net and a fallback, but it also creates new governance issues around consent, transparency, and accountability.

And if you zoom out, there’s a broader analogy here. Imagine a similar model appearing in financial services: robotic or AI assistants in customers’ homes, handling sensitive information. The governance and risk questions would be the same: oversight, explainability, data protection, and liability.

My verdict

“Plausible prototype heading to market, but with considerable caveats and early-adopter risk.”

Realistic: Serious funding, credible specs, and a visible roadmap.

Hype: The “home butler” narrative is overstated; it’s not autonomous yet.

Risk: Privacy, data governance and safety oversight are still open questions.

We’re getting closer to humanoids that live among us, but not as equals, and not without oversight.